Unlocking Your Inner App Developer: A Deep Dive into Google Labs' New AI Gem, Opal

In the rapidly evolving world of Large Language Models (LLMs), the line between what you program and what you simply type is increasingly blurred. The way we interact with LLMs has changed enormously, from the early days of ChatGPT to the more advanced interfaces of Gemini and Claude. Now Google Labs has thrown its hat into the ring with a new tool called Opal, designed to let individuals build their own LLM workflows without writing code.

What is Opal? The No-Code LLM Workflow Creator

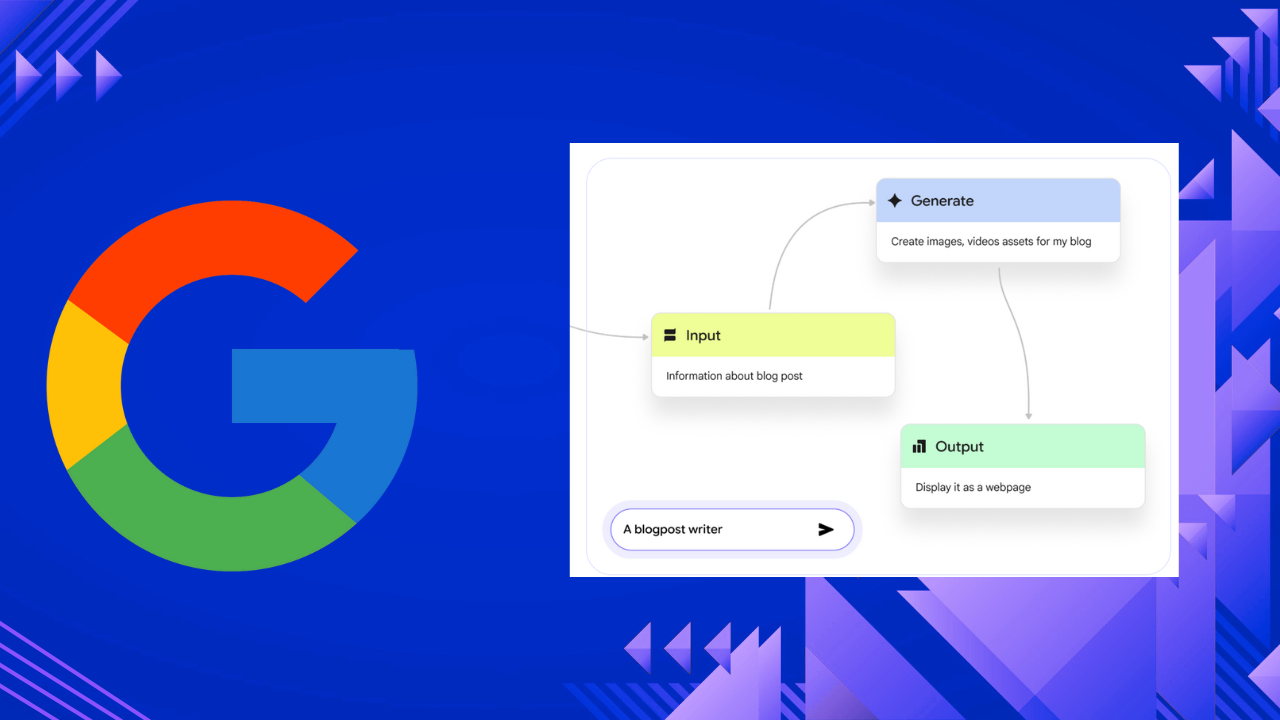

Opal is the latest public preview from Google Labs, the product-focused team behind tools such as NotebookLM. The core idea behind Opal is to make it simple for users to build their own LLM workflows. Think of it as a visual builder in which you chain prompts together, use a range of models, and integrate Google tools.

It’s designed for prototyping workflows, creating proofs of concept, or quickly building mini-apps for specific needs. While not yet at the level of “hardcore agents,” Opal provides a powerful no-code way to craft, save, and reuse these workflows.

How Does Opal Work Its Magic?

Opal simplifies the creation of LLM-powered applications through an intuitive interface:

- Descriptive Creation or Remixing: You can either describe to Opal what you want to build (e.g., “a tool that does literature reviews on arXiv papers”) or start by remixing existing examples from its gallery. Opal then maps out a set of steps for your workflow.

- Chaining Steps and Tools: A workflow consists of steps, which can include:

  - Tools: Such as searching the web to gather information.

  - Google Models: Models such as Gemini 2.5 Flash, Gemini 2.5 Pro, Veo, or Lyria, used for tasks like writing outlines, drafting blog posts, or generating images.

- Customization and Refinement: Opal offers extensive customization capabilities:

  - Editing Steps: Users can open an individual step and edit its logic or inputs.

  - Model Selection: You can choose a different model for each task within the workflow. For instance, research might be done with Gemini 2.5 Flash, the writing with Gemini 2.0 Flash, and image generation with Imagen 4.

  - Input Wiring: A crucial feature is the ability to pass inputs between different nodes (steps). For example, you can add a new user input such as “reader persona” and wire it into both the research step and the final blog post step, so that the output is tailored to the intended audience.

- Transparency: A console view allows you to see exactly what each step is doing, including the specific model calls and research findings, which can be an excellent learning tool for understanding how agents and workflows are constructed.

- Example: The Blog Post Generator

  - Opal’s blog post generator example demonstrates these capabilities well. It takes a topic, searches the web for research, writes an outline, drafts the post, and creates a banner image.

  - Users can then refine the result. For example, after switching the image generator to Imagen 4, you can instruct it to embed the topic text directly in the banner image.
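The chaining and input wiring described in the list above can be pictured in code. The following is a minimal sketch only: Opal is a no-code tool and exposes none of these names. `Step`, `run_workflow`, and the three stub functions are hypothetical, and each stub returns a plain string rather than calling a real search tool or model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    name: str                # key under which this step's output is stored
    inputs: List[str]        # user inputs or earlier outputs wired into this step
    run: Callable[..., str]  # stand-in for a tool or model call


def run_workflow(steps: List[Step], user_inputs: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, passing each step only the values wired to it."""
    values = dict(user_inputs)
    for step in steps:
        missing = [key for key in step.inputs if key not in values]
        if missing:
            raise KeyError(f"step {step.name!r} is missing inputs: {missing}")
        values[step.name] = step.run(**{key: values[key] for key in step.inputs})
    return values


# Hypothetical stubs: no web search or model is actually called.
def do_research(topic: str, reader_persona: str) -> str:
    return f"notes on {topic} for {reader_persona}"


def write_outline(research: str) -> str:
    return f"outline from [{research}]"


def write_draft(outline: str, reader_persona: str) -> str:
    return f"post for {reader_persona} using [{outline}]"


# "reader_persona" is wired into both the research step and the draft step.
blog_workflow = [
    Step("research", ["topic", "reader_persona"], do_research),
    Step("outline", ["research"], write_outline),
    Step("draft", ["outline", "reader_persona"], write_draft),
]

result = run_workflow(blog_workflow, {"topic": "Opal", "reader_persona": "beginners"})
print(result["draft"])
```

Keeping every intermediate value in one dictionary also mirrors the console view: after a run, `result` holds the output of each step, not just the final draft.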

Baked-in Power: Opal's Advantages

Opal builds upon the concepts seen in other low-code/no-code workflow tools like n8n and Lindy. However, a key advantage of Opal is its direct integration with Google’s services and models:

- Google Search: Seamlessly incorporate web searches into your workflows.

- Image Generation Models: Access to models like Imagen 4 for creative assets.

- AudioLM: The ability to generate speech, opening possibilities for creating podcasts or audio content.

- Deep Research: Integration with advanced research capabilities.

This baked-in access to Google’s ecosystem distinguishes Opal and provides a comprehensive toolkit for users.

A Glimpse into the Future of App Development

Opal is currently in public preview and is US-only, though a VPN might allow access. As a Google Labs product, it’s expected to evolve rapidly, much like NotebookLM, which gained many features over time as users engaged with it.

Opal signifies a major step in making generative AI and LLM workflows accessible to a broader audience, especially those without coding backgrounds. It allows for rapid prototyping and the creation of useful mini-apps, blurring the lines between traditional programming and intuitive, descriptive building. Furthermore, the underlying prompts and structures created in Opal can even be extracted and used as a foundation for developing full-fledged coded applications.

Keep an eye on Opal – it represents an exciting future where anyone can become an “app developer” for AI-powered tasks and automation.

What is Opal?

Opal is the latest public preview from Google Labs, the product-focused team behind tools such as NotebookLM. It is designed to make it simple for individuals to build their own Large Language Model (LLM) workflows without needing to code. While not yet at the level of "hardcore agents," Opal allows users to chain prompts together, use a range of models, and integrate Google tools to prototype workflows, create proofs of concept, or quickly build mini-apps. It provides a no-code way to craft, save, and reuse these workflows for a variety of tasks.

How does Opal work to help users create LLM workflows?

Opal simplifies workflow creation through an intuitive interface. Users can either describe to Opal what they want to build (e.g., "a tool that does literature reviews on arXiv papers") or start by remixing existing examples from its gallery. Opal then maps out a set of steps for the workflow. These steps can include tools, such as web search, and Google models (such as Gemini 2.5 Flash, Gemini 2.5 Pro, Veo, or Lyria) that perform tasks like writing outlines, drafting blog posts, or generating images. Users have extensive customization capabilities, including editing individual steps, selecting a different model for each task, and, crucially, wiring inputs between nodes (steps) to pass information along and customize outputs. A console view also provides transparency, showing exactly what each step is doing, including model calls and research findings, which makes it an excellent learning tool for understanding how agents and workflows are constructed.

What are some examples of workflows or mini-apps that can be created with Opal?

Opal comes with a gallery of examples that users can remix. One prominent example is a blog post generator, which takes a topic, searches the web for research, writes an outline, drafts the post, and creates a banner image. Users can then refine this, for instance by changing the image generator to Imagen 4 or by adding a "reader persona" input to tailor the content to a specific audience. Other examples in the gallery include "learning with YouTube," various social media content creation tools, and product research tools. Users can also describe their own tools from scratch, such as a tool that performs literature reviews on arXiv papers and finds related ones.

What advantages does Opal offer?

Opal builds upon concepts seen in other low-code/no-code workflow tools like n8n and Lindy. A key advantage of Opal is its direct integration with Google's services and models. This includes Google Search, image generation models (like Imagen 4), AudioLM for generating speech (useful for podcasts or other audio content), and deep research capabilities. This baked-in access to Google's ecosystem distinguishes Opal and gives users a comprehensive toolkit.

What is the current status and future outlook for Opal?

Opal is currently in public preview and is US-only, though a VPN might allow access. As a Google Labs product, it is expected to evolve rapidly, much like NotebookLM, which gained many features over time as users engaged with it. Opal represents a significant step in making generative AI and LLM workflows accessible to a broader audience, especially those without coding backgrounds, allowing for rapid prototyping and the creation of useful mini-apps. Furthermore, the underlying prompts and structures created in Opal can be extracted and used as a foundation for developing full-fledged coded applications, bridging the gap between no-code development and traditional programming.

Can workflows built in Opal be translated into code for more advanced development?

Yes. The prompts and workflow structure designed within Opal can be extracted and carried over into code. This lets users prototype and refine their LLM applications quickly in a no-code environment and then take the refined components (especially the prompts and logic) to a coding environment for more robust, scalable, or custom development. This bridges the gap between rapid prototyping and professional software development.
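As a rough illustration of that hand-off, the sketch below assumes a prompt has been copied by hand out of a workflow step and turned into a reusable template. Nothing here is an Opal export format: the prompt wording, `build_outline_prompt`, `make_outline`, and `echo_model` are all hypothetical, and `echo_model` is a placeholder where a real model client would go.

```python
from string import Template
from typing import Callable

# Prompt text as it might be copied out of a workflow step (hypothetical wording).
OUTLINE_PROMPT = Template(
    "Write an outline for a blog post about $topic aimed at $reader_persona."
)


def build_outline_prompt(topic: str, reader_persona: str) -> str:
    """Fill the template's placeholders with the user's inputs."""
    return OUTLINE_PROMPT.substitute(topic=topic, reader_persona=reader_persona)


def make_outline(generate: Callable[[str], str], topic: str, reader_persona: str) -> str:
    """Build the prompt and pass it to whatever model client the app uses."""
    return generate(build_outline_prompt(topic, reader_persona))


def echo_model(prompt: str) -> str:
    # Placeholder for a real model call; it only wraps the prompt it received.
    return f"[model output for: {prompt}]"


print(make_outline(echo_model, "Opal", "beginners"))
```

Passing the model in as a plain callable keeps the prompt logic testable without network access, and the placeholder can later be swapped for a real client without touching the template.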

What are the current limitations or access requirements for Opal?

Opal is currently in public preview and officially available only in the US, although a VPN might allow users elsewhere to access it. Users also need a Google account to use the platform. As a preview product, it may impose usage quotas, and a paid version with fewer restrictions may be introduced in the future.

Can users customize and iterate on workflows within Opal?

Yes, users have extensive capabilities to customize and iterate on workflows within Opal. Once a workflow has been generated, either by describing it to Opal or by remixing an existing example from the gallery, users can open individual steps and edit their logic or inputs. A key aspect of customization is the ability to select a different model for each task within the workflow. For instance, research might be conducted using Gemini 2.5 Flash, the actual writing of a blog post handled by Gemini 2.0 Flash, and image generation done with Imagen 4. Users can also add new user inputs to their workflows, such as a "reader persona," and then wire these inputs into multiple nodes or steps. The information provided in the new input is then passed to each of those steps, so the output is tailored to the intended audience or specific requirements.
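Per-step model selection amounts to a small lookup with a fallback. The sketch below is an assumption-laden illustration, not something Opal exposes: `STEP_MODELS`, `DEFAULT_MODEL`, and `model_for` are hypothetical names, and the strings are display labels from the example above rather than API model identifiers.

```python
from typing import Dict, Optional

DEFAULT_MODEL = "Gemini 2.5 Flash"  # assumed fallback for steps with no explicit choice

# Display labels only; these are not API model identifiers.
STEP_MODELS: Dict[str, str] = {
    "research": "Gemini 2.5 Flash",
    "write_post": "Gemini 2.0 Flash",
    "banner_image": "Imagen 4",
}


def model_for(step: str, overrides: Optional[Dict[str, str]] = None) -> str:
    """Return the model label for a step, preferring a per-run override."""
    if overrides and step in overrides:
        return overrides[step]
    return STEP_MODELS.get(step, DEFAULT_MODEL)


print(model_for("write_post"))
print(model_for("write_post", {"write_post": "Gemini 2.5 Pro"}))
```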

Can I customize the app logic in Opal?

Yes, you can significantly customize the app logic within Opal, as the platform is designed to let users build and modify their own LLM workflows and "mini-apps" in a no-code environment. You can start by remixing an application from the pre-built gallery, which serves as a template for your modifications. Within a workflow, you can edit individual steps and their associated prompts, such as changing the instructions for generating an outline or writing a blog post. You can also select a different model for each step, including Gemini models (e.g., Gemini 2.5 Flash, Gemini 2.5 Pro), Veo, or Lyria, and swap out tools such as the image generator (e.g., from Gemini 2.0 Flash image generation to Imagen 4). A key customization feature is the ability to add new user inputs (e.g., "reader persona") and wire them to different parts of your workflow, so that user-provided information influences steps like research or content generation and makes the app's behavior dynamic. Opal takes a node-based, no-code approach: you connect components, or "steps," to build complex workflows that incorporate capabilities like deep research, Google Search, and the generation of video or of audio using AudioLM. Beyond remixing, you can create entirely new apps from scratch by describing what you want to build, and Opal generates an initial workflow that you can customize further. Ultimately, Opal lets users prototype and build mini-apps by chaining prompts and drawing on various models and Google tools, and for broader development you can take the prompts and underlying logic from your Opal creations and convert them into code.
